Fourth generation iPod touch camera focal lengths

Late one night soon after I bought my fourth generation iPod touch, I did some sloppy measurements to try to figure out the 35mm equivalent focal length of each of its two cameras. Here is a sloppy summary of my findings.

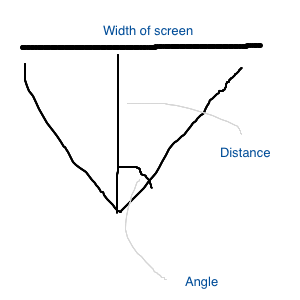

The display on my MacBook is 11 5/16 inches wide (287.3375 mm). It fills the back (“720p”) camera width at a distance of 14 3/16 inches (360.3625 mm). It fills the front (“FaceTime”) camera width at a distance of 11 inches (279.4 mm).

Using some basic trigonometry:

a = 2 arctan(d/(2f)) # a = angle of view, d = dimension (my "width"), f = focal length or, here, subject distance

…we can find each camera lens’s angle of view:

2*Math.atan2(287.3375, 2*360.3625) = 0.7587331199535923 radians (43.47 degrees)

2*Math.atan2(287.3375, 2* 279.4) = 0.9498931263447237 radians (54.42 degrees)
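As a rough sketch, the two calculations above can be wrapped in a small JavaScript helper. The names `angleOfView` and `toDegrees` and the variable names are made up for illustration; the measurements are the ones given above.

```javascript
// Angle of view in radians for a subject of width d that exactly
// fills the frame at distance f: a = 2 * arctan(d / (2f)).
function angleOfView(d, f) {
  return 2 * Math.atan2(d, 2 * f);
}

// Convert radians to degrees.
function toDegrees(radians) {
  return radians * 180 / Math.PI;
}

const INCH_MM = 25.4;
const displayWidth = (11 + 5 / 16) * INCH_MM;  // 287.3375 mm
const backDistance = (14 + 3 / 16) * INCH_MM;  // 360.3625 mm
const frontDistance = 11 * INCH_MM;            // 279.4 mm

console.log(toDegrees(angleOfView(displayWidth, backDistance)).toFixed(2));  // 43.47
console.log(toDegrees(angleOfView(displayWidth, frontDistance)).toFixed(2)); // 54.42
```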

Standard 135 film is 35mm wide, and this is the format for which I wanted to figure out the iPod lens equivalents. I massaged the angle of view calculation into a form that could yield a focal length based on an angle:

tan(a/2) = d/2/f

For 35mm equivalent, I plugged in 35 for d (“dimension”, my “width”) and solved for focal length as a function of angle:

tan(a/2) = 17.5/f

f = 17.5/tan(a/2)

So, the back (“720p”) camera has a focal length equivalent to a 43.9mm lens on a 35mm film camera body (or a 27.44mm lens on an APS-C body). The front (“FaceTime”) camera is wider, equivalent to a 34.0mm lens on a 35mm film body (or a 21.25mm lens on an APS-C body).
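Here is a minimal sketch of that focal length calculation in JavaScript, using the angles of view in radians from earlier. The function name `equivalentFocalLength` and its `frameWidth` parameter are hypothetical, and the default of 35 simply follows my choice of d above.

```javascript
// Focal length that gives angle of view `angle` (radians) across a
// frame of width `frameWidth`: f = (d / 2) / tan(a / 2).
// The default of 35 mirrors the width plugged in for d in the text.
function equivalentFocalLength(angle, frameWidth = 35) {
  return (frameWidth / 2) / Math.tan(angle / 2);
}

const backAngle = 0.7587331199535923;   // back ("720p") camera, radians
const frontAngle = 0.9498931263447237;  // front ("FaceTime") camera, radians

console.log(equivalentFocalLength(backAngle).toFixed(1));  // 43.9
console.log(equivalentFocalLength(frontAngle).toFixed(1)); // 34.0
```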

Then I looked at the EXIF metadata to see what it says about the camera. For the 720p camera, the metadata records a focal length of 3.9mm. If my 35mm equivalent focal length calculations are correct, this means a crop factor of 11.26 and thus a 3.11mm sensor width.

Now for the FaceTime camera, the EXIF metadata records a focal length of 3.9mm. Again? So allegedly this would be an 8.72 crop factor and a 4.01mm sensor width. However, this camera is lower resolution (640×480 versus 960×720) and I have a hard time believing that it is a larger sensor. (If it were, the per-pixel area would be significantly larger and I’d expect much better quality and low-light performance than the back camera.) I suspect the focal length metadata is (or at least was when I first looked…I should check again on the latest iOS) simply wrong for pictures taken with the FaceTime camera.
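The crop factor and sensor width arithmetic can be sketched the same way. The function names `cropFactor` and `sensorWidth` are made up for illustration, the inputs are the rounded 35mm equivalent focal lengths and the EXIF focal length, and the 35 reference width is the same assumption as before.

```javascript
// Crop factor: 35mm equivalent focal length divided by the actual focal length.
function cropFactor(equivalentFocalLength, actualFocalLength) {
  return equivalentFocalLength / actualFocalLength;
}

// Sensor width implied by a crop factor, relative to a 35 mm reference width.
function sensorWidth(crop, referenceWidth = 35) {
  return referenceWidth / crop;
}

const exifFocalLength = 3.9;                         // mm, as recorded for both cameras
const backCrop = cropFactor(43.9, exifFocalLength);  // back ("720p") camera
const frontCrop = cropFactor(34.0, exifFocalLength); // front ("FaceTime") camera

console.log(backCrop.toFixed(2), sensorWidth(backCrop).toFixed(2));   // 11.26 3.11
console.log(frontCrop.toFixed(2), sensorWidth(frontCrop).toFixed(2)); // 8.72 4.01
```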